Navigating the Complexities of Modern Computing: A Comprehensive Guide to MapReduce and Its Impact





The rapid growth of data generation has driven demand for tools that can process massive datasets. MapReduce, introduced by Google in 2004 and popularized by its open-source implementation in Apache Hadoop, became a cornerstone of large-scale data analysis. This guide covers its architecture, applications, strengths, and limitations, and the influence it has had on the distributed-processing frameworks that followed.

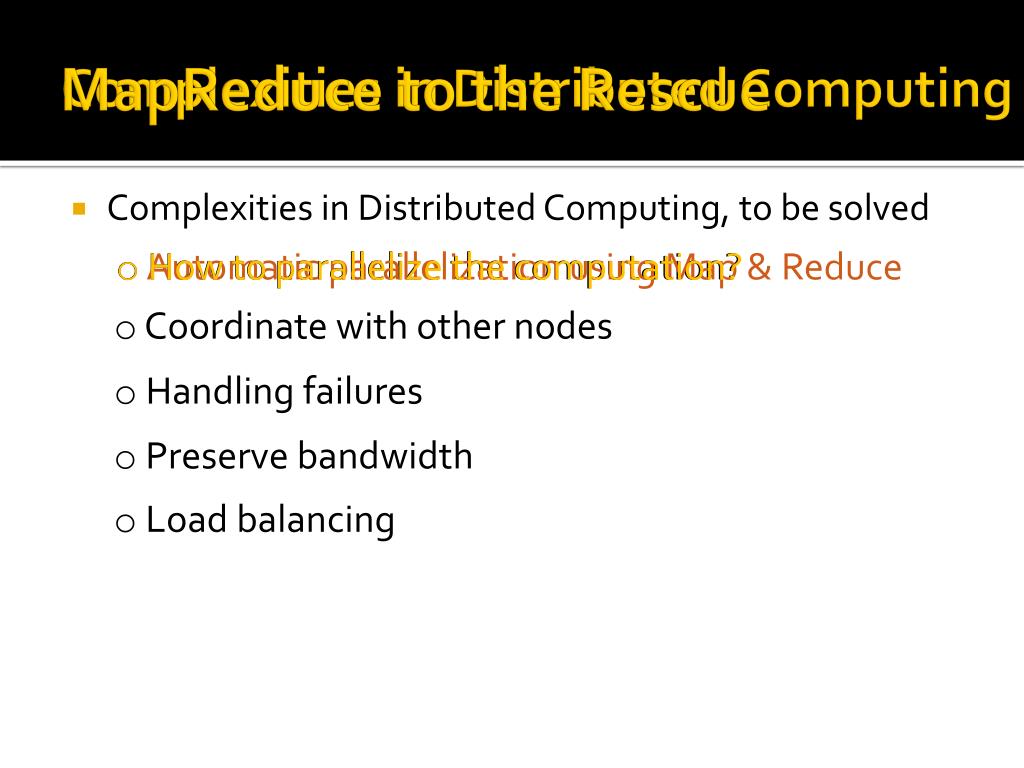

Understanding MapReduce: A Paradigm for Distributed Computing

At its core, MapReduce is a programming model for processing large datasets across a cluster of computers. A job is divided into many small, independent tasks that run in parallel on different machines. The framework takes care of partitioning the input, scheduling tasks, moving intermediate data, and recovering from failures, so the programmer supplies only two functions. This lets a cluster of commodity machines handle data volumes that would overwhelm a single machine.

The model itself is built upon two fundamental operations:

1. Map: The map function is applied independently to each input record (for example, one line of a text file) and emits zero or more intermediate key-value pairs.

2. Reduce: Between the two phases, the framework shuffles and sorts the intermediate pairs so that all values sharing a key arrive at the same reducer. The reduce function is then called once per key with that key's list of values and combines them into the final output, typically a single value per key.
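To make the two phases concrete, here is a minimal single-process sketch of the classic word-count job. The function names (`map_fn`, `shuffle`, `reduce_fn`, `run_job`) are hypothetical; a real framework such as Hadoop runs the map and reduce calls on many machines and performs the shuffle over the network, but the data flow is the same.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_fn(doc_id: str, text: str) -> Iterator[tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input record.

    doc_id is the input key; word count ignores it, but it mirrors the
    (key, value) shape of MapReduce input records.
    """
    for word in text.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group intermediate values by key (done by the framework, not the user)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))  # reducers receive keys in sorted order


def reduce_fn(word: str, counts: list[int]) -> tuple[str, int]:
    """Reduce: combine all values for one key into a single output value."""
    return word, sum(counts)


def run_job(records: dict[str, str]) -> dict[str, int]:
    intermediate = (
        pair for doc_id, text in records.items() for pair in map_fn(doc_id, text)
    )
    return dict(reduce_fn(key, values) for key, values in shuffle(intermediate).items())


if __name__ == "__main__":
    docs = {"d1": "the quick brown fox", "d2": "the lazy dog", "d3": "The fox"}
    print(run_job(docs))
    # {'brown': 1, 'dog': 1, 'fox': 2, 'lazy': 1, 'quick': 1, 'the': 3}
```

Note that only `map_fn` and `reduce_fn` contain job-specific logic; `shuffle` and `run_job` are the parts a framework provides once for every job.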

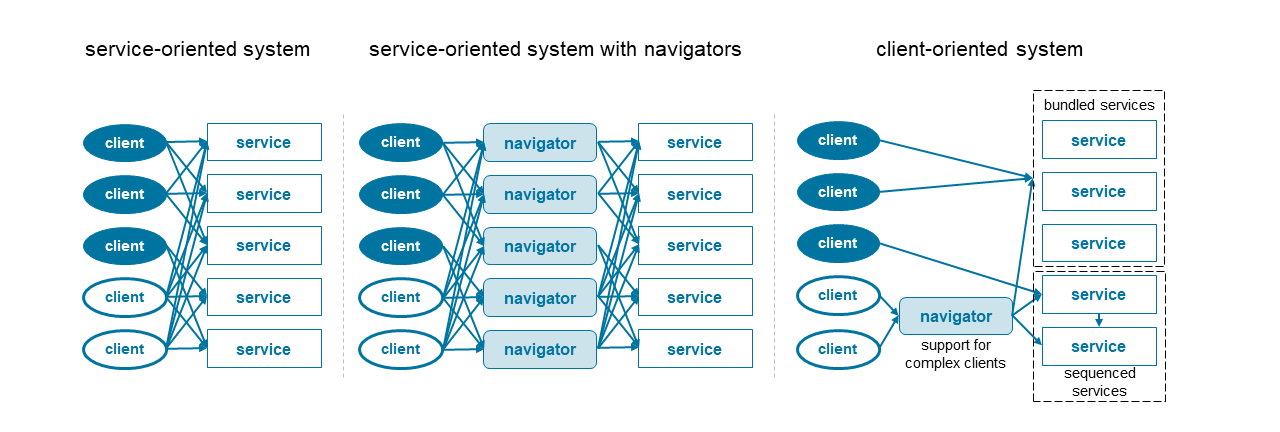

The Architecture of MapReduce: A Collaborative Ecosystem

A MapReduce deployment relies on several cooperating components. In the classic Hadoop implementation (MapReduce v1), these are:

1. Client: Submits the job (the packaged map and reduce code, its configuration, and the location of the input data) to the cluster.

2. JobTracker: The central coordinator. It splits each job into map and reduce tasks, assigns them to nodes (preferring nodes that already store the task's input data), monitors their progress, and reschedules tasks that fail.

3. TaskTracker: Runs on each worker node and executes the map and reduce tasks assigned to it in a fixed number of task slots. It sends periodic heartbeats to the JobTracker to report progress and receive new work.

4. Distributed File System (DFS), such as HDFS: Stores the job's input and final output, replicating data blocks across nodes for availability and fault tolerance. Intermediate map output is not written to the DFS; it stays on the local disks of the map nodes until the reducers fetch it.

In Hadoop 2 and later, YARN replaced the JobTracker and TaskTrackers with a cluster-wide ResourceManager, a NodeManager on each node, and a per-job ApplicationMaster.
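The following sketch shows how these components divide the work, assuming a single process in which threads stand in for TaskTracker slots and Python dictionaries stand in for local disks and the DFS. The client submits input, the coordinator cuts it into splits and schedules one map task per split, each map task writes its output partitioned by reducer, and each reduce task pulls its partition from every map output. All names and parameters (`submit_job`, `NUM_REDUCERS`, `split_size`, `slots`) are illustrative, not Hadoop APIs.

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

NUM_REDUCERS = 2  # hypothetical job setting: number of reduce tasks


def partition(key: str, num_reducers: int) -> int:
    # Stable hash so that every map task sends a given key to the same reducer.
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def map_task(split: list[str]) -> list[dict[str, list[int]]]:
    """One map task: process one input split, writing R partitions to 'local disk'."""
    buckets = [defaultdict(list) for _ in range(NUM_REDUCERS)]
    for line in split:
        for word in line.lower().split():
            buckets[partition(word, NUM_REDUCERS)][word].append(1)
    return buckets


def reduce_task(r: int, map_outputs: list[list[dict[str, list[int]]]]) -> dict[str, int]:
    """One reduce task: fetch partition r from every map task's output, then reduce it."""
    merged: dict[str, list[int]] = defaultdict(list)
    for buckets in map_outputs:
        for word, ones in buckets[r].items():
            merged[word].extend(ones)
    return {word: sum(ones) for word, ones in sorted(merged.items())}


def submit_job(lines: list[str], split_size: int = 2, slots: int = 3) -> dict[str, int]:
    """Client -> coordinator: split the input, run map tasks, then reduce tasks."""
    splits = [lines[i:i + split_size] for i in range(0, len(lines), split_size)]
    with ThreadPoolExecutor(max_workers=slots) as pool:  # stand-in for task slots
        map_outputs = list(pool.map(map_task, splits))
        # Reduce tasks start only after every map task has finished.
        reduce_outputs = list(
            pool.map(lambda r: reduce_task(r, map_outputs), range(NUM_REDUCERS))
        )
    final: dict[str, int] = {}
    for part in reduce_outputs:  # in Hadoop, each reducer writes its own part file to the DFS
        final.update(part)
    return final


if __name__ == "__main__":
    print(submit_job(["a b a", "b c", "c c", "a"]))
```

Because partitioning is deterministic, each key lands in exactly one reduce task, so merging the per-reducer results never overwrites a count.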

Applications of MapReduce: Unlocking Insights from Big Data

MapReduce has been applied to a wide range of batch workloads, including:

1. Web Search: Google rebuilt its production web-search indexing system on MapReduce, and building an inverted index over crawled pages remains a textbook MapReduce job.

2. Social Media Analysis: Batch analysis of user interactions, trends, and sentiment across the large volumes of data generated by millions of users.

3. Scientific Computing: Researchers use MapReduce to analyze large datasets in fields such as genomics, climate science, and astrophysics.

4. Fraud Detection: Financial institutions scan historical transaction logs for suspicious patterns; real-time fraud scoring, by contrast, generally calls for a streaming system.

5. Recommendation Systems: E-commerce platforms aggregate user behavior and preferences in batch jobs whose results feed personalized product recommendations.

Advantages of MapReduce: Efficiency, Scalability, and Fault Tolerance

The adoption of MapReduce is driven by its inherent advantages:

1. Efficiency: Running many tasks in parallel substantially cuts wall-clock processing time, and scheduling tasks on the nodes that already store their input avoids moving large amounts of data across the network.

2. Scalability: Capacity grows by adding nodes to the cluster, and the same program runs unchanged whether the input is gigabytes or petabytes.

3. Fault Tolerance: Because tasks are designed to have no side effects beyond their output, a failed task can simply be re-executed. If a node fails, the JobTracker reschedules its in-progress tasks on other nodes and also reruns any completed map tasks from that node, since their output was stored on its local disk.
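A rough sketch of the coordinator's retry logic, assuming simulated nodes that either run a task or raise a hypothetical `NodeFailure`. The four-attempt limit mirrors Hadoop's default per-task attempt limit; everything else (`run_with_retries`, `tasks_to_rerun`) is illustrative. The second helper captures the subtle case described above: completed map tasks on a failed node must also be rerun, because their output lived on that node's local disk.

```python
from typing import Callable

MAX_ATTEMPTS = 4  # mirrors Hadoop's default maximum attempts per task


class NodeFailure(Exception):
    """Simulated crash of a worker node while it runs a task."""


def run_on_node(node: str, task: Callable[[], object], failed_nodes: set[str]) -> object:
    # A real TaskTracker would launch the task in a child JVM; here we just call it.
    if node in failed_nodes:
        raise NodeFailure(f"{node} is down")
    return task()


def run_with_retries(
    task: Callable[[], object],
    nodes: list[str],
    failed_nodes: set[str],
    max_attempts: int = MAX_ATTEMPTS,
) -> tuple[object, list[tuple[str, str]]]:
    """Try the task on successive nodes until one succeeds or attempts run out."""
    attempts: list[tuple[str, str]] = []
    for node in nodes[:max_attempts]:
        try:
            result = run_on_node(node, task, failed_nodes)
        except NodeFailure:
            attempts.append((node, "failed"))
            continue  # reschedule the same task on the next node
        attempts.append((node, "ok"))
        return result, attempts
    raise RuntimeError(f"task failed after {len(attempts)} attempts: {attempts}")


def tasks_to_rerun(completed_on: dict[str, str], failed_node: str) -> list[str]:
    """Completed map tasks whose output sat on the failed node's disk must run again."""
    return sorted(task for task, node in completed_on.items() if node == failed_node)


if __name__ == "__main__":
    print(run_with_retries(lambda: 42, ["n1", "n2", "n3"], {"n1"}))
    print(tasks_to_rerun({"m0": "n1", "m1": "n2", "m2": "n1"}, "n1"))
```

Re-execution is only safe because the task is a pure function of its input split; a task with external side effects could produce duplicate results when retried.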

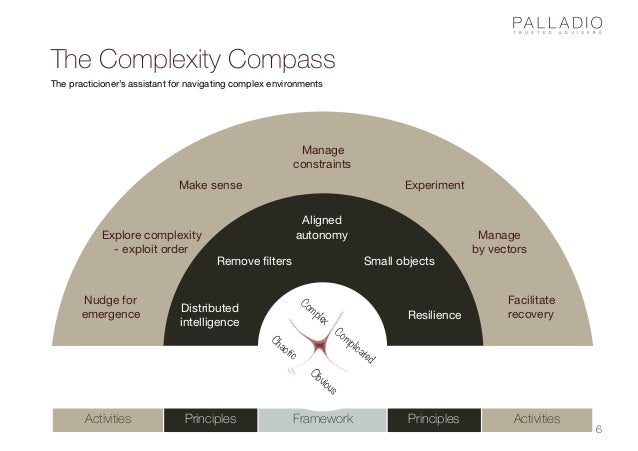

Beyond MapReduce: The Evolution of Distributed Computing

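While MapReduce transformed distributed computing, it has clear limitations. Every job is a single map-shuffle-reduce pass, so multi-stage or iterative algorithms must be expressed as chains of separate jobs, each writing its full output to the distributed file system for the next job to read back. In Hadoop MapReduce v1, the single JobTracker also managed every task in the cluster, which made it a bottleneck and a single point of failure in large clusters.

These limitations spurred the development of frameworks such as Apache Spark and Apache Flink. They keep MapReduce's core idea of data-parallel operations over partitioned data, but add in-memory caching of intermediate results (which especially benefits iterative computation), general dataflow graphs with many operators instead of a fixed map-then-reduce pair, and richer APIs such as Spark's RDDs and DataFrames and Flink's DataStream API.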
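To illustrate why iterative algorithms fit MapReduce poorly, here is a sketch of PageRank written as a chain of MapReduce-style jobs. Each iteration reads the whole graph state from a file (standing in for the DFS), runs map, shuffle, and reduce, and writes a new file for the next job. It assumes a tiny graph with no dangling nodes (every node has at least one out-link) and a damping factor of 0.85; the function names are hypothetical.

```python
import json
import tempfile
from collections import defaultdict
from pathlib import Path
from typing import Iterator

DAMPING = 0.85  # assumed damping factor


def pagerank_map(node: str, state: dict) -> Iterator[tuple[str, tuple]]:
    """Emit the node's link list (to preserve the graph) plus a rank share per out-link."""
    rank, links = state["rank"], state["links"]
    yield node, ("links", links)
    for dest in links:
        yield dest, ("rank", rank / len(links))


def pagerank_reduce(values: list[tuple], n: int) -> dict:
    """Sum incoming rank shares and re-attach the node's link list."""
    links, total = [], 0.0
    for kind, payload in values:
        if kind == "links":
            links = payload
        else:
            total += payload
    return {"rank": (1 - DAMPING) / n + DAMPING * total, "links": links}


def run_iteration(in_path: Path, out_path: Path) -> None:
    """One complete job: read state from 'DFS', map, shuffle, reduce, write back."""
    graph = json.loads(in_path.read_text())
    groups: dict[str, list[tuple]] = defaultdict(list)
    for node, state in graph.items():
        for key, value in pagerank_map(node, state):
            groups[key].append(value)
    result = {node: pagerank_reduce(vals, len(graph)) for node, vals in groups.items()}
    out_path.write_text(json.dumps(result))


def pagerank(links: dict[str, list[str]], iterations: int = 50) -> dict[str, float]:
    with tempfile.TemporaryDirectory() as tmp:
        n = len(links)
        path = Path(tmp) / "iter-0.json"
        path.write_text(json.dumps({k: {"rank": 1 / n, "links": v} for k, v in links.items()}))
        for i in range(1, iterations + 1):
            next_path = Path(tmp) / f"iter-{i}.json"
            run_iteration(path, next_path)  # a separate job with full disk I/O each time
            path = next_path
        return {k: v["rank"] for k, v in json.loads(path.read_text()).items()}


if __name__ == "__main__":
    print(pagerank({"A": ["B", "C"], "B": ["C"], "C": ["A"]}))
```

Fifty iterations mean fifty full write-and-read cycles of the graph; a framework like Spark can instead keep the graph cached in memory across iterations.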

FAQs: Addressing Common Queries on MapReduce

1. What are the key differences between MapReduce and other distributed computing frameworks like Spark and Flink?

All three process large datasets on clusters, but they differ in programming model, performance, and processing style. MapReduce is batch-only: each job reads its input from disk, makes one map and reduce pass, and writes its output back to disk. Spark keeps intermediate data in memory where possible, which makes multi-stage and iterative jobs much faster, and supports streaming through micro-batches. Flink is built around continuous, record-at-a-time stream processing and treats batch jobs as bounded streams.

2. How does MapReduce handle data integrity and fault tolerance?

Data integrity is mostly the responsibility of the underlying distributed file system. HDFS, for example, replicates each block across several nodes (three by default), so the input remains available if a node fails. It also stores checksums for its data: checksums detect corruption when data is read, and a corrupt replica is then replaced from a healthy copy. Fault tolerance for the computation itself comes from re-execution, with failed tasks rescheduled on other nodes.

3. What are the limitations of MapReduce?

Each job is a single batch pass that reads from and writes to disk, so latency is too high for real-time applications, and iterative or multi-stage algorithms pay that I/O cost at every step. Expressing complex logic as chains of map and reduce functions is also cumbersome, and in Hadoop MapReduce v1 the centralized JobTracker limits how large a cluster can grow.

4. What are the best use cases for MapReduce?

MapReduce suits large batch jobs that need only one or a few passes over the data, such as building search indexes, log processing and ETL, and batch analytics for social media, scientific data, and fraud detection. Its simple model and scalability make it an effective choice for these workloads.

5. Is MapReduce still relevant in the era of cloud computing?

For batch workloads, yes. Managed cloud services such as Amazon EMR and Google Cloud Dataproc still run Hadoop MapReduce, and its simplicity and robustness suit jobs that need scalability and fault tolerance. Many new workloads on the same platforms, however, use Spark instead.
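A toy model of the replication-and-checksum mechanism described in the data-integrity answer above: each block is stored on several replicas with a checksum recorded at write time, and a read verifies each block, falls back to a healthy replica, and repairs corrupt copies. It uses `zlib.crc32` as a stand-in for the file system's real checksum, an 8-byte block size purely for readability, and hypothetical function names. Real HDFS places the replicas of each block on different DataNodes and handles repair through re-replication rather than inside the read call.

```python
import zlib

BLOCK_SIZE = 8  # hypothetical, tiny for illustration; real HDFS blocks are far larger


def split_blocks(data: bytes, size: int = BLOCK_SIZE) -> list[bytes]:
    return [data[i:i + size] for i in range(0, len(data), size)]


def write_file(data: bytes, replicas: int = 3) -> tuple[list[list[bytes]], list[int]]:
    """Store every block on `replicas` simulated nodes and record its checksum."""
    blocks = split_blocks(data)
    checksums = [zlib.crc32(block) for block in blocks]
    stores = [list(blocks) for _ in range(replicas)]  # one full copy per simulated node
    return stores, checksums


def read_file(stores: list[list[bytes]], checksums: list[int]) -> bytes:
    """Verify each block; on a mismatch use a healthy replica and repair the bad ones."""
    out = []
    for i, expected in enumerate(checksums):
        good = next((s[i] for s in stores if zlib.crc32(s[i]) == expected), None)
        if good is None:
            raise OSError(f"block {i}: every replica is corrupt")
        for store in stores:  # overwrite corrupt copies with the verified block
            if zlib.crc32(store[i]) != expected:
                store[i] = good
        out.append(good)
    return b"".join(out)


if __name__ == "__main__":
    data = b"hello mapreduce world!"
    stores, sums = write_file(data)
    stores[0][1] = b"CORRUPT!"  # simulate silent corruption on one node
    print(read_file(stores, sums))
```

The checksum only detects the damage; it is the surviving replicas that make recovery possible, which is why replication and checksums are used together.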

Tips for Effective MapReduce Implementation

1. Optimize Data Partitioning: The partitioner decides which reducer receives each key (Hadoop's default hashes the key). Choose a scheme that spreads keys evenly, because a few very frequent keys can overload one reducer and stall the whole job.

2. Minimize Data Shuffle: Every intermediate pair is written to disk, sent over the network, and sorted before reduction, so filter out unneeded records and fields during the map phase.

3. Leverage Combiners: A combiner pre-aggregates map output locally before the shuffle, reducing the data transferred between nodes. The framework may apply it zero, one, or several times, so it must not change the result; this holds for associative and commutative operations such as sums and maximums.

4. Monitor Job Performance: Track job progress, task durations, and resource utilization to find bottlenecks such as straggling or skewed tasks.

5. Consider Alternative Frameworks: For iterative, interactive, or real-time workloads, frameworks such as Spark or Flink usually offer better flexibility and performance.
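The first three tips can be checked with a small experiment. This sketch, with hypothetical function names, counts how many intermediate records a word-count job would shuffle, with and without map-side filtering and a combiner. It partitions with `zlib.crc32(key) % num_reducers` as a deterministic stand-in for hash partitioning; Python's built-in `hash()` of strings is randomized per process, so it would not route keys consistently across separate worker processes.

```python
import zlib
from collections import Counter


def hash_partition(key: str, num_reducers: int) -> int:
    """Deterministic hash partitioning: the same key always goes to the same reducer."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def map_words(line: str, stopwords: frozenset[str]) -> list[tuple[str, int]]:
    """Map with early filtering: stopwords are dropped before they are ever shuffled."""
    return [(w, 1) for w in line.lower().split() if w not in stopwords]


def combine(pairs: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Combiner: pre-sum counts on the map side.

    Safe because addition is associative and commutative, so applying it
    zero, one, or many times leaves the final totals unchanged.
    """
    totals = Counter()
    for word, n in pairs:
        totals[word] += n
    return sorted(totals.items())


def shuffle_load(
    splits: list[list[str]],
    num_reducers: int,
    stopwords: frozenset[str] = frozenset(),
    use_combiner: bool = False,
) -> list[int]:
    """Count the records each reducer would receive over the network."""
    load = [0] * num_reducers
    for split in splits:  # one map task per split
        pairs = [p for line in split for p in map_words(line, stopwords)]
        if use_combiner:
            pairs = combine(pairs)
        for word, _ in pairs:
            load[hash_partition(word, num_reducers)] += 1
    return load


if __name__ == "__main__":
    splits = [["the cat the hat", "the bat"], ["the cat sat"]]
    print(shuffle_load(splits, 2))
    print(shuffle_load(splits, 2, use_combiner=True))
    print(shuffle_load(splits, 2, stopwords=frozenset({"the"}), use_combiner=True))
```

On this input the combiner cuts the shuffled records from 9 to 7, and also filtering out the frequent key "the" cuts them to 5. The per-reducer lists show how a single very frequent key concentrates load on whichever reducer it hashes to.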

Conclusion: A Legacy of Innovation and Continued Relevance

MapReduce fundamentally changed how large datasets are processed and analyzed. By hiding parallelization, data distribution, and failure recovery behind two simple functions, it made cluster-scale analysis accessible to ordinary programmers and helped usher in data-driven decision making at many organizations. Newer frameworks have since overtaken it for many workloads, but they build on its ideas. As data volumes keep growing, the principles MapReduce embodied (data-parallel operations, moving computation to the data, and fault tolerance through re-execution) remain central to big-data processing.

![Apache Spark vs Hadoop MapReduce - Feature Wise Comparison [Infographic] - DataFlair](https://data-flair.training/blogs/wp-content/uploads/sites/2/2016/09/Hadoop-MapReduce-vs-Apache-Spark.jpg)
