The Power of Memory Mapping: Understanding mmap in Unix Systems

Unix-like operating systems provide a powerful and versatile mechanism for working with memory and files, known as memory mapping. Through the mmap() system call, a program can map a file into its address space and then read and modify the file's contents with ordinary memory accesses, blurring the line between file storage and RAM. This article examines how memory mapping works in Unix systems, its benefits and pitfalls, and where it is used in practice.

The Essence of Memory Mapping

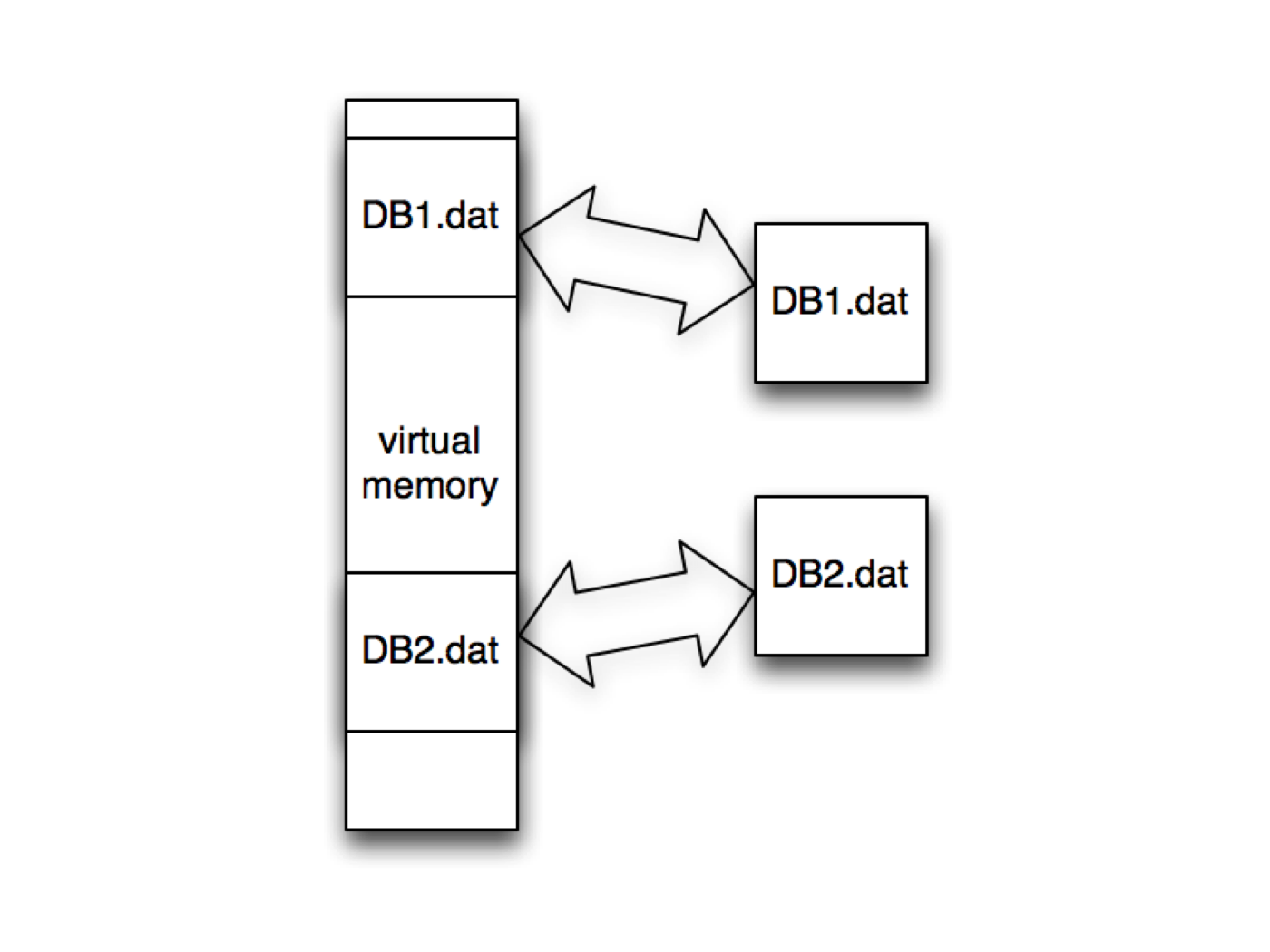

At its core, memory mapping establishes a direct correspondence between a region of a program's address space and a file (or part of a file) on disk. Once mmap() has set up this correspondence, the program can treat the file as if it were an array in its own memory: the kernel loads each page of the file on demand, the first time the program touches it. This removes the need for explicit read() and write() calls and for the copies between kernel and user-space buffers that they imply, which simplifies code and, for many access patterns, improves performance.
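
As a minimal sketch of this idea, the C function below maps a whole file read-only and scans it as an ordinary byte array. The function name count_byte_mmap is hypothetical, and the code assumes a POSIX system; an empty file is handled separately because mmap() rejects a zero length.

```c
#define _DEFAULT_SOURCE 1          /* glibc: expose POSIX declarations under strict -std modes */
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Hypothetical helper: count how many times byte `c` occurs in the file at
 * `path` by mapping the whole file read-only. Returns -1 on error. */
long count_byte_mmap(const char *path, unsigned char c)
{
    int fd = open(path, O_RDONLY);
    if (fd == -1)
        return -1;

    struct stat st;
    if (fstat(fd, &st) == -1) {
        close(fd);
        return -1;
    }
    if (st.st_size == 0) {          /* mmap() fails for a zero length */
        close(fd);
        return 0;
    }

    size_t len = (size_t)st.st_size;
    const unsigned char *data = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, 0);
    close(fd);                      /* the mapping stays valid after close() */
    if (data == MAP_FAILED)
        return -1;

    long count = 0;
    for (size_t i = 0; i < len; i++)    /* plain memory access, no read() calls */
        if (data[i] == c)
            count++;

    munmap((void *)data, len);
    return count;
}
```

The loop contains no read() calls: the kernel brings each page of the file into memory when the loop first touches it.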

Understanding the Mechanics

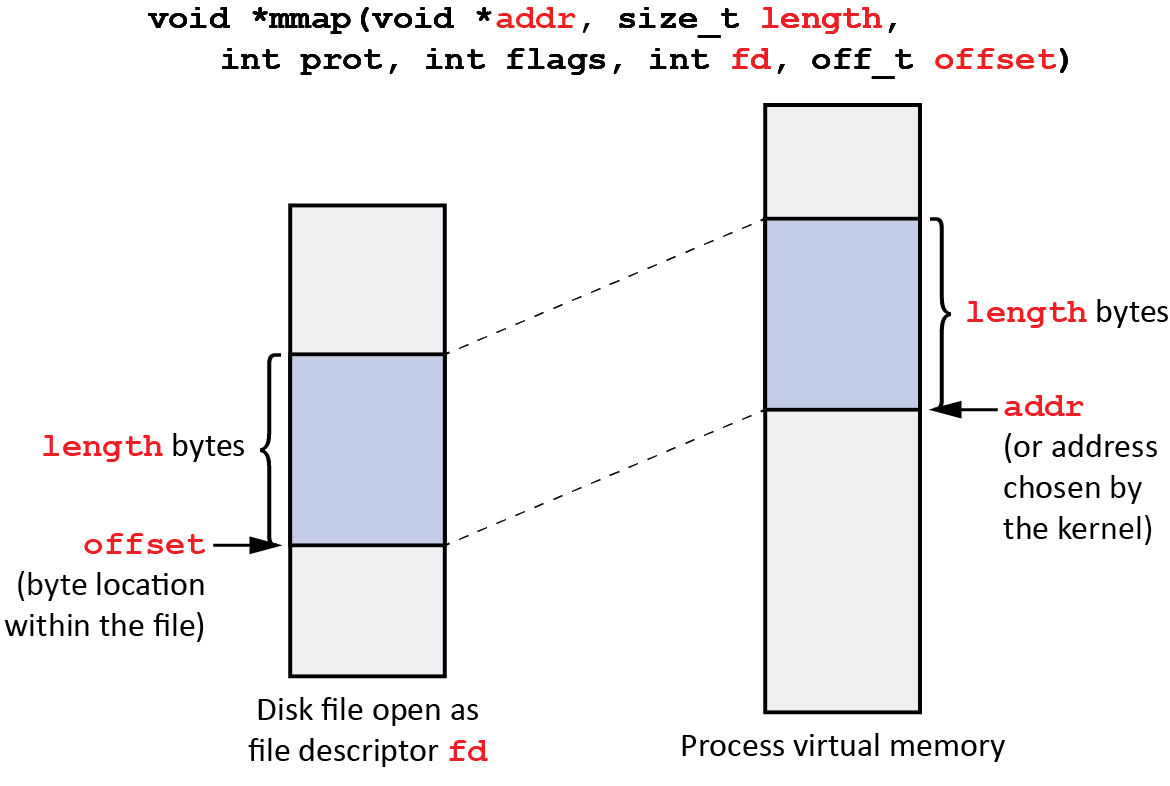

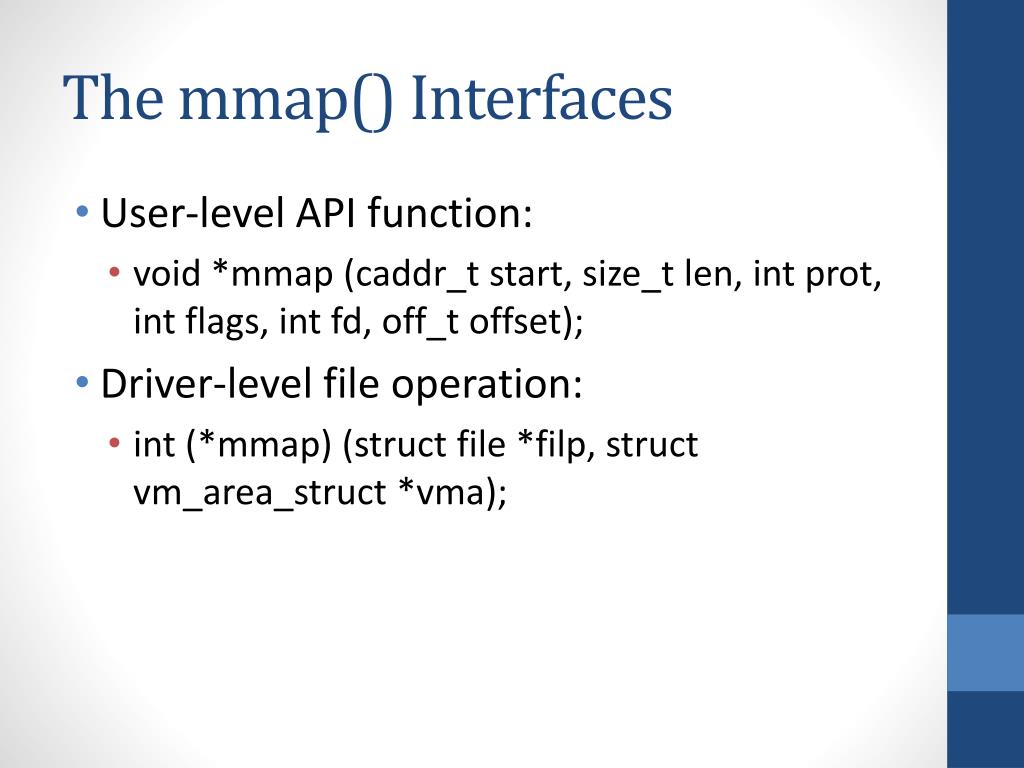

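The mmap() system call, declared in <sys/mman.h> as void *mmap(void *addr, size_t length, int prot, int flags, int fd, off_t offset), takes the following arguments:

- Address hint (addr): A suggested starting address for the mapping. Passing NULL lets the kernel choose, which is the usual and most portable choice.

- Length: The size, in bytes, of the file region to be mapped into memory.

- Protection flags (prot): The access permissions for the mapped region, such as read-only (PROT_READ) or read-write (PROT_READ | PROT_WRITE). They must be compatible with the mode in which the file was opened.

- Flags: Additional options. The most important choice is between MAP_SHARED, under which changes are visible to other processes mapping the same file and are carried through to the file, and MAP_PRIVATE, under which changes go to a private copy-on-write copy and never reach the file.

- File descriptor (fd): The open file to be mapped.

- Offset: The starting position within the file. It must be a multiple of the system page size.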

On success, mmap() returns a pointer to the start of the mapped region, through which the program reads and modifies the file data with ordinary memory accesses. On failure it returns MAP_FAILED (not NULL) and sets errno. The mapping remains valid until it is removed with munmap(), even if the file descriptor is closed first.
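
The sketch below spells out each argument in a concrete call and handles the page-alignment rule for the offset by rounding it down with sysconf(_SC_PAGESIZE). The struct file_view type and the map_file_range/unmap_file_range helpers are hypothetical names chosen for illustration, and the caller is assumed to request a range that lies within the file (touching mapped pages beyond the end of the file raises SIGBUS).

```c
#define _DEFAULT_SOURCE 1          /* glibc: expose POSIX declarations under strict -std modes */
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical type: `data` points at the requested byte, while `base` and
 * `map_len` describe the page-aligned mapping that must be passed to munmap(). */
struct file_view {
    void       *base;
    size_t      map_len;
    const char *data;
};

/* Map `length` bytes of `fd` starting at an arbitrary `offset`.
 * Returns 0 on success, -1 on failure. */
int map_file_range(int fd, off_t offset, size_t length, struct file_view *view)
{
    long page = sysconf(_SC_PAGESIZE);
    if (page <= 0 || offset < 0 || length == 0)
        return -1;

    off_t aligned = offset - (offset % page);   /* round down to a page boundary */
    size_t delta = (size_t)(offset - aligned);  /* extra bytes before the requested start */

    void *p = mmap(NULL,             /* addr: let the kernel choose */
                   length + delta,   /* length: cover the requested bytes */
                   PROT_READ,        /* protection: read-only */
                   MAP_PRIVATE,      /* flags: private, never written back */
                   fd,               /* file descriptor */
                   aligned);         /* offset: must be page-aligned */
    if (p == MAP_FAILED)
        return -1;

    view->base = p;
    view->map_len = length + delta;
    view->data = (const char *)p + delta;
    return 0;
}

void unmap_file_range(struct file_view *view)
{
    munmap(view->base, view->map_len);
}
```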

The Advantages of Memory Mapping

Memory mapping offers several advantages over traditional file I/O techniques, making it a valuable tool for a wide range of applications:

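- Enhanced Performance: Memory mapping avoids a system call for every read or write and the copy from the kernel's page cache into a user-space buffer, because the mapping gives the process direct access to the kernel's cached copy of the file. This can speed up access to large files, particularly with random access patterns, although page faults and mapping setup have costs of their own, so the gain depends on the workload.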

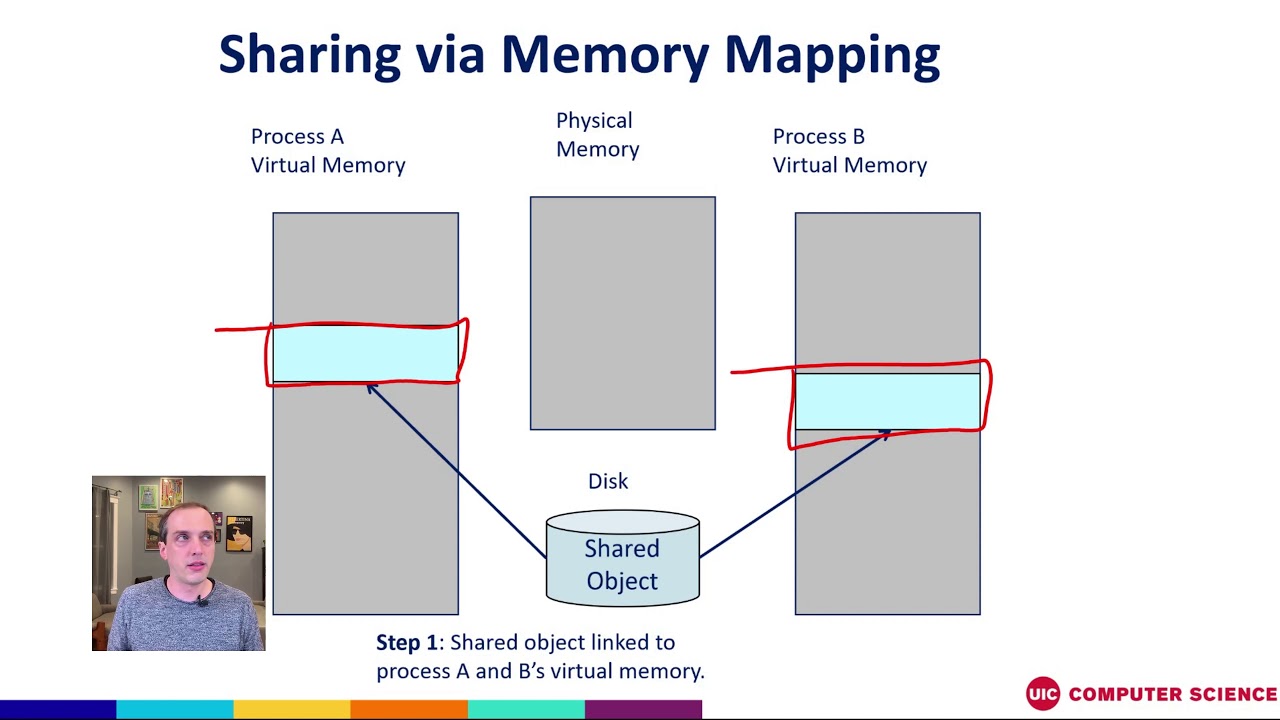

- Shared Memory: When multiple processes map the same file region with MAP_SHARED, a change made by one process is visible to the others. This makes memory mapping an efficient inter-process communication mechanism: processes exchange data through the shared pages rather than copying it through pipes or sockets, although they still need to coordinate their accesses.

- Simplified Data Management: Because a mapped file can be accessed through pointers and array indexing, code that would otherwise manage buffers and issue explicit read() and write() calls often becomes shorter and easier to maintain.

- Efficient Data Modification: With a shared mapping (MAP_SHARED), modifications made to the mapped region are carried through to the underlying file without explicit write() calls. The kernel writes the modified pages back to disk in the background, and msync() can force this at a chosen point. Modifications made through a private mapping (MAP_PRIVATE) are never written back to the file.

- Anonymous Mappings: mmap() can also create anonymous mappings (MAP_ANONYMOUS) that are not backed by any file. They provide zero-filled memory that disappears when it is unmapped or when the program terminates, which is useful for temporary buffers and data structures that should not persist.
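
Memory that is not backed by any file can be requested by passing MAP_ANONYMOUS with a file descriptor of -1, as in this minimal sketch. MAP_ANONYMOUS is assumed to be available; it is widely supported (Linux, the BSDs, macOS) but was historically an extension to POSIX. The anon_alloc and anon_free names are hypothetical.

```c
#define _DEFAULT_SOURCE 1          /* glibc: expose MAP_ANONYMOUS */
#include <stddef.h>
#include <sys/mman.h>

/* Hypothetical helper: allocate `len` bytes of zero-filled, private memory
 * that is not backed by a file. Returns NULL on failure. */
void *anon_alloc(size_t len)
{
    void *p = mmap(NULL, len, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS,
                   -1, 0);          /* no file: fd is -1, offset is 0 */
    return p == MAP_FAILED ? NULL : p;
}

/* Release memory obtained from anon_alloc(); `len` must match. */
void anon_free(void *p, size_t len)
{
    munmap(p, len);
}
```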

Applications of Memory Mapping

Memory mapping finds widespread applications across various domains, including:

- Database Systems: Memory mapping is extensively used in database systems to manage large data sets efficiently. By mapping data files into memory, databases can access and manipulate data directly, achieving significant performance gains.

- Image and Video Processing: Image and video processing applications often deal with large amounts of data. Memory mapping enables efficient loading and manipulation of images and video frames, accelerating processing tasks.

- Web Servers: Web servers can use memory mapping to serve static files. Mapping a file lets the server send its contents from memory without first reading them into a separate buffer, and frequently requested files stay resident in the page cache, which reduces latency.

- Scientific Computing: Memory mapping plays a crucial role in scientific computing, enabling efficient manipulation of large datasets and facilitating parallel processing across multiple cores.

- Text Editors and IDEs: Text editors and integrated development environments (IDEs) employ memory mapping to provide fast and responsive editing experiences. By mapping large files into memory, they can efficiently handle editing operations and maintain a consistent view of the document.

Challenges and Considerations

While memory mapping offers numerous benefits, it is essential to be aware of potential challenges and considerations:

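- Memory Consumption: A mapping reserves virtual address space for its full length, and every page the program touches occupies physical memory. Clean pages of a file mapping can be evicted and reread from the file later, but mapping very large files can exhaust the address space (especially on 32-bit systems), and touching large mappings when memory is scarce can cause heavy paging that degrades performance.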

- Memory Management: Each mapping should be released with munmap() once it is no longer needed. Mappings that are never unmapped persist until the process exits and, in long-running programs, can exhaust address space and other resources.

- Concurrency Issues: When multiple processes share a mapped file region, careful synchronization mechanisms are required to prevent data corruption or race conditions.

- File System Limitations: Memory mapping relies on the underlying file system’s support for efficient file mapping. Some file systems may have limitations or performance bottlenecks that impact the effectiveness of memory mapping.
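
The following sketch combines the shared-memory and synchronization points: a parent and a forked child increment a counter that lives in a MAP_SHARED | MAP_ANONYMOUS mapping, using C11 atomic_fetch_add() so that concurrent increments are not lost. The function name shared_counter_demo is hypothetical. It assumes MAP_ANONYMOUS is available and that atomic_long is lock-free (true on common 64-bit platforms), since a lock-based atomic may not synchronize across processes.

```c
#define _DEFAULT_SOURCE 1          /* glibc: expose MAP_ANONYMOUS and POSIX APIs */
#include <stdatomic.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical demo: parent and child each add `per_process` to a counter
 * stored in a shared anonymous mapping; assumes atomic_long is lock-free.
 * Returns the final counter value, or -1 on error. */
long shared_counter_demo(long per_process)
{
    void *mem = mmap(NULL, sizeof(atomic_long), PROT_READ | PROT_WRITE,
                     MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED)
        return -1;
    atomic_long *counter = mem;
    atomic_init(counter, 0);

    pid_t pid = fork();            /* the child inherits the shared mapping */
    if (pid == -1) {
        munmap(mem, sizeof(atomic_long));
        return -1;
    }

    /* Both processes run this loop against the same shared page. */
    for (long i = 0; i < per_process; i++)
        atomic_fetch_add(counter, 1);

    if (pid == 0)
        _exit(0);                  /* child: finished, skip the parent's cleanup */

    if (waitpid(pid, NULL, 0) == -1) {   /* wait for the child's increments */
        munmap(mem, sizeof(atomic_long));
        return -1;
    }
    long result = atomic_load(counter);
    munmap(mem, sizeof(atomic_long));
    return result;
}
```

Replacing the atomic increment with a plain increment on a non-atomic long would make the final total unpredictable, which is exactly the kind of race condition described above.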

Understanding mmap: Frequently Asked Questions

1. What is the difference between memory mapping and traditional file I/O?

Memory mapping provides a direct connection between a file and a region of memory, allowing programs to access and manipulate file data as if it were in memory. Traditional file I/O involves explicit read and write operations, transferring data between the kernel and user space.
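
For comparison, here is a minimal sketch of the traditional approach: the file is pulled into a fixed 4096-byte user-space buffer one read() call at a time, and every call copies data from the kernel into that buffer. The function name count_byte_read and the buffer size are arbitrary choices for illustration, and interrupted reads (EINTR) are not retried.

```c
#define _DEFAULT_SOURCE 1          /* glibc: expose POSIX declarations under strict -std modes */
#include <fcntl.h>
#include <unistd.h>

/* Hypothetical helper: count occurrences of byte `c` in the file at `path`
 * using explicit read() calls into a user-space buffer. Returns -1 on error. */
long count_byte_read(const char *path, unsigned char c)
{
    int fd = open(path, O_RDONLY);
    if (fd == -1)
        return -1;

    unsigned char buf[4096];       /* data is copied here by each read() */
    long count = 0;
    ssize_t n;
    while ((n = read(fd, buf, sizeof buf)) > 0)   /* one system call per chunk */
        for (ssize_t i = 0; i < n; i++)
            if (buf[i] == c)
                count++;

    close(fd);
    return n == -1 ? -1 : count;   /* n is 0 at end of file, -1 on error */
}
```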

2. How does memory mapping improve performance?

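Memory mapping avoids a system call for every read or write and the copy between the kernel's page cache and a user-space buffer. Data still has to be read from disk the first time each page is touched (through a page fault), so the gains are largest when the same data is accessed repeatedly or at scattered offsets; for simple sequential reads, read() with a large buffer can perform comparably.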

3. What are the benefits of shared memory mapping?

Shared memory mapping enables multiple processes to access and modify the same data region in memory, facilitating efficient inter-process communication and data sharing.

4. How do I ensure that changes made to a mapped region are written back to the file?

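With a shared mapping (MAP_SHARED), changes made to the mapped region are carried through to the file, but the kernel writes the modified pages back at a time of its choosing. To force them to disk at a specific point, call msync() on the region with the MS_SYNC flag, which waits for the write to complete, or MS_ASYNC, which only schedules it. Note that MS_SYNC is a flag for msync(), not for mmap(). Changes made through a private mapping (MAP_PRIVATE) are never written back to the file.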
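
A minimal sketch of the write-back path: the file is opened read-write, mapped with PROT_READ | PROT_WRITE and MAP_SHARED, modified through ordinary stores, and then flushed with msync(MS_SYNC). The function name uppercase_file_in_place is hypothetical, and the example assumes a regular file whose size does not change while it is mapped.

```c
#define _DEFAULT_SOURCE 1          /* glibc: expose POSIX declarations under strict -std modes */
#include <ctype.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

/* Hypothetical helper: convert the file at `path` to upper case in place.
 * Returns 0 on success, -1 on failure. */
int uppercase_file_in_place(const char *path)
{
    int fd = open(path, O_RDWR);   /* PROT_WRITE with MAP_SHARED needs O_RDWR */
    if (fd == -1)
        return -1;

    struct stat st;
    if (fstat(fd, &st) == -1) {
        close(fd);
        return -1;
    }
    if (st.st_size == 0) {         /* nothing to convert; mmap() rejects length 0 */
        close(fd);
        return 0;
    }

    size_t len = (size_t)st.st_size;
    unsigned char *p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd);
    if (p == MAP_FAILED)
        return -1;

    for (size_t i = 0; i < len; i++)
        p[i] = (unsigned char)toupper(p[i]);   /* ordinary stores, no write() */

    int rc = msync(p, len, MS_SYNC);           /* block until pages reach the file */
    munmap(p, len);
    return rc == -1 ? -1 : 0;
}
```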

5. What are the limitations of memory mapping?

Mapping very large files consumes virtual address space and, as pages are touched, physical memory, which can cause paging and performance problems when memory is scarce. Memory mapping also relies on the underlying file system's support for efficient file mapping.

Tips for Effective Memory Mapping

- Optimize Memory Usage: Carefully choose the size of the mapped region: map only the portion of a file you actually need, or process very large files in fixed-size windows, rather than mapping everything at once.

- Use Appropriate Flags: Select the appropriate protection and flag options for mmap() to control access permissions, sharing behavior, and data persistence.

- Implement Synchronization Mechanisms: When multiple processes share a mapped file region, implement synchronization mechanisms to prevent data corruption and race conditions.

- Monitor Memory Usage: Regularly monitor memory usage to identify potential issues related to excessive memory consumption or leaks.

- Consider Alternative Techniques: If memory mapping is not suitable for a particular application, consider alternative techniques, such as file caching or other forms of inter-process communication.
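
One way to apply the advice about choosing the size of the mapped region is to walk a large file through a fixed-size window, mapping and unmapping one piece at a time so that only a bounded amount of the file is mapped at once. In this sketch the function name sum_bytes_windowed is hypothetical, and the window is rounded up to whole pages because every mmap() offset must be page-aligned.

```c
#define _DEFAULT_SOURCE 1          /* glibc: expose POSIX declarations under strict -std modes */
#include <fcntl.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: add up every byte of the file at `path` while keeping
 * at most one window of `window` bytes (rounded up to whole pages) mapped.
 * Returns 0 and stores the total in *sum_out on success, -1 on error. */
int sum_bytes_windowed(const char *path, size_t window, uint64_t *sum_out)
{
    long page = sysconf(_SC_PAGESIZE);
    if (page <= 0 || window == 0)
        return -1;
    size_t pg = (size_t)page;
    window = (window + pg - 1) / pg * pg;   /* keep every window offset page-aligned */

    int fd = open(path, O_RDONLY);
    if (fd == -1)
        return -1;
    struct stat st;
    if (fstat(fd, &st) == -1) {
        close(fd);
        return -1;
    }

    uint64_t sum = 0;
    for (off_t off = 0; off < st.st_size; off += (off_t)window) {
        size_t remaining = (size_t)(st.st_size - off);
        size_t len = remaining < window ? remaining : window;  /* last window may be short */

        const unsigned char *p = mmap(NULL, len, PROT_READ, MAP_PRIVATE, fd, off);
        if (p == MAP_FAILED) {
            close(fd);
            return -1;
        }
        for (size_t i = 0; i < len; i++)
            sum += p[i];
        munmap((void *)p, len);             /* release this window before mapping the next */
    }

    close(fd);
    *sum_out = sum;
    return 0;
}
```

A larger window means fewer mmap() and munmap() calls, while a smaller one keeps less of the file mapped at any moment; the right balance depends on the application.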

Conclusion

Memory mapping, implemented through the mmap() system call, provides a powerful and versatile mechanism for working with memory and files in Unix systems. It can improve performance, simplifies data management, and enables efficient inter-process communication. By understanding its mechanics, benefits, and potential challenges, developers can use memory mapping to make their applications faster and more efficient, provided they pay careful attention to memory consumption, synchronization, and file system limitations.
