Unlocking Performance: A Deep Dive into Memory-Mapped Files with Uncached Access

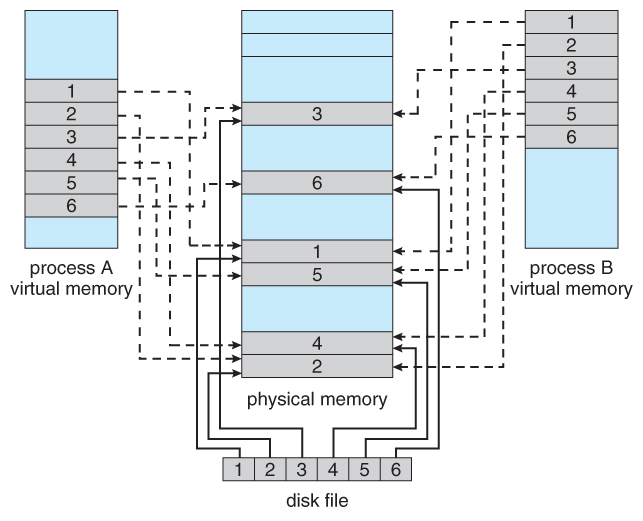

Memory-mapped files are an operating-system mechanism that bridges traditional file I/O and direct memory access. An application maps a file into its address space and then reads and writes it as if it were a contiguous block of memory, avoiding an explicit read or write call for every access. On mainstream operating systems the mapped pages are backed by the kernel's page cache. That caching helps in many scenarios, but it can become a bottleneck with datasets larger than memory or with very frequent updates. This is where uncached access comes into play: reducing or bypassing that cache can improve performance in specific use cases.
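To make the idea of treating a file as memory concrete, here is a minimal sketch using Python's standard mmap module. The helper names `uppercase_in_place` and `demo_uppercase` are hypothetical and exist only for illustration; the sketch assumes a small, non-empty file.

```python
import mmap
import os
import tempfile


def uppercase_in_place(path):
    """Map the whole file and rewrite its bytes through the mapping."""
    with open(path, "r+b") as f:
        # Length 0 maps the entire file; an empty file cannot be mapped.
        with mmap.mmap(f.fileno(), 0) as mm:
            # Slice assignment writes into the mapping; the length must not change.
            mm[:] = mm[:].upper()
            # Ask the OS to write the modified pages back to the file.
            mm.flush()


def demo_uppercase():
    """Create a temporary file, modify it via the mapping, and read it back."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(b"hello mapped world")
        uppercase_in_place(path)
        with open(path, "rb") as f:
            return f.read()
    finally:
        os.remove(path)
```

No explicit read or write call touches the file contents inside `uppercase_in_place`; the slice operations on the mapping do all the work.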

Understanding the Essence of Caching and its Impact

Caching is a fundamental optimization that operating systems use to accelerate data access. When a file is memory-mapped, the kernel loads its pages into the page cache on demand and maps those same pages into the process. Reads and writes then operate on memory, and the kernel writes modified pages back to disk later, which reduces disk accesses and improves performance.

However, caching can add latency in specific scenarios:

- Large Datasets: If the file is larger than available memory, its pages cannot all stay cached, so the kernel repeatedly evicts pages and reads them back from disk.

- Frequent Updates: Frequently modified pages stay dirty and must be written back to disk repeatedly, which adds overhead.

- Real-Time Applications: Applications that need low latency and deterministic behavior may find caching unpredictable, because page faults, eviction, and write-back can introduce delays at arbitrary points.
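For the large-dataset case, one common way to keep the memory footprint bounded is to map only a window of the file rather than the whole thing. The sketch below assumes Python's mmap module; `read_window` and `demo_window` are hypothetical helper names, and the requested range is assumed to lie inside the file.

```python
import mmap
import os
import tempfile


def read_window(path, offset, length):
    """Return `length` bytes at `offset` by mapping only the region around them."""
    gran = mmap.ALLOCATIONGRANULARITY
    # The mapping offset must be a multiple of the allocation granularity,
    # so round down and remember how far into the window the data starts.
    aligned = (offset // gran) * gran
    delta = offset - aligned
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), delta + length,
                       access=mmap.ACCESS_READ, offset=aligned) as mm:
            return mm[delta:delta + length]


def demo_window():
    """Build a test file and return (bytes read via the window, expected bytes)."""
    gran = mmap.ALLOCATIONGRANULARITY
    payload = bytes(i % 251 for i in range(3 * gran + 100))
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(payload)
        offset = gran + 10
        return read_window(path, offset, 16), payload[offset:offset + 16]
    finally:
        os.remove(path)
```

Only the pages inside the window can become resident through this mapping, so the application, not the eviction policy, decides how much of the file is in play at once.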

The Power of Uncached Memory-Mapped Files

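Uncached access addresses these challenges by interacting with the underlying file as directly as the platform allows, keeping as little of it as possible in the cache. How far this goes depends on the operating system: on Linux, for example, an ordinary file mapping is always served from the page cache, so the practical options are hints that limit caching, unbuffered read and write calls (O_DIRECT), or DAX filesystems on persistent memory, which map storage directly. Where it applies, avoiding cache management overhead can improve performance noticeably in specific scenarios.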
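As a rough illustration of bypassing the cache, the sketch below performs an unbuffered read with the Linux-specific O_DIRECT open flag. Note that this applies to read calls on a file descriptor, not to a file mapping; the anonymous mmap here serves only as a page-aligned buffer. The 4096-byte alignment is an assumption (the real requirement depends on the device and filesystem), the names `read_direct` and `demo_direct` are hypothetical, and the code assumes a Unix-like system where `os.readv` is available. It falls back to a normal buffered read when O_DIRECT is missing or rejected.

```python
import mmap
import os
import tempfile

BLOCK = 4096  # assumed alignment; check your device and filesystem


def _read_into(path, flags, buf):
    """Open `path` with `flags` and read into `buf`, returning the byte count."""
    fd = os.open(path, flags)
    try:
        return os.readv(fd, [buf])
    finally:
        os.close(fd)


def read_direct(path, nbytes):
    """Read the first `nbytes` of `path`, trying to bypass the page cache."""
    size = -(-nbytes // BLOCK) * BLOCK  # round up to a multiple of BLOCK
    # An anonymous mapping is page-aligned, which O_DIRECT buffers need.
    with mmap.mmap(-1, size) as buf:
        try:
            direct = os.O_RDONLY | getattr(os, "O_DIRECT", 0)
            n = _read_into(path, direct, buf)
        except OSError:
            # Some filesystems reject O_DIRECT; use a buffered read instead.
            n = _read_into(path, os.O_RDONLY, buf)
        return buf[:min(n, nbytes)]


def demo_direct():
    """Write a test file and check that the direct read returns its prefix."""
    payload = bytes(i % 251 for i in range(10000))
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(payload)
        return read_direct(path, 5000) == payload[:5000]
    finally:
        os.remove(path)
```

The silent fallback keeps the sketch portable, but a real application would probably want to know which path was taken, since the two behave very differently under load.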

Key Benefits of Uncached Access:

- Reduced Latency: Removing the cache layer can shorten access time when cached data would rarely be reused, as with very large files or frequent updates. This matters most for real-time applications where responsiveness is paramount.

- Deterministic Behavior: Without eviction and write-back happening in the background, access times become more consistent, which suits applications that need precise timing and control.

- Enhanced Data Integrity: Data reaches storage sooner instead of lingering in a cache, which narrows the window for cache-related inconsistencies. It does not replace synchronization between concurrent writers.

- Optimized Resource Utilization: Not holding a large cache leaves more memory for the application, particularly with massive files that would otherwise overwhelm available memory.

Practical Applications of Uncached Memory-Mapped Files:

- High-Performance Data Processing: Applications requiring fast data processing, such as scientific simulations, financial modeling, or real-time data analysis, can benefit significantly from uncached access, enabling rapid data manipulation and analysis.

- Database Systems: Uncached memory-mapped files can enhance the performance of database systems, especially when dealing with large data files or frequent updates.

- Embedded Systems: Uncached access is particularly valuable in embedded systems with limited memory resources, where caching can lead to performance bottlenecks.

- Real-Time Applications: Applications requiring low latency and deterministic behavior, such as control systems, audio/video processing, or financial trading systems, can benefit from uncached access to ensure responsiveness and predictability.

Implementing Uncached Memory-Mapped Files

The specific implementation details of uncached memory-mapped files vary across operating systems and programming languages. However, the general principle involves specifying a flag or option to the memory-mapping function, indicating that caching should be disabled.

For instance, mmap() itself is specified by POSIX, but it has no flag that disables caching. Linux adds the non-POSIX flags MAP_LOCKED and MAP_POPULATE, which control residency rather than caching: MAP_LOCKED locks the mapped pages in physical memory so they cannot be swapped out, and MAP_POPULATE pre-faults the mapping, reading the file's data into memory up front so that later accesses do not stall on page faults. To limit caching, applications typically combine a mapping with advisory calls such as madvise() or posix_fadvise(), or fall back to unbuffered I/O with open flags such as O_DIRECT on Linux.
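As one possible illustration in Python, the sketch below passes advisory hints to the kernel around a mapped read: a sequential-access hint on the mapping and a hint that the cached pages are no longer needed afterwards. These are hints, not guarantees, and their availability varies by platform, so each call is guarded. The function names `checksum_with_hints` and `demo_hints` are hypothetical.

```python
import mmap
import os
import tempfile

CHUNK = 1 << 16  # process the mapping in 64 KiB slices


def checksum_with_hints(path):
    """Sum all bytes of a file through a mapping, advising the kernel as we go."""
    with open(path, "rb") as f:
        fd = f.fileno()
        with mmap.mmap(fd, 0, access=mmap.ACCESS_READ) as mm:
            # Hint: access will be sequential, so read-ahead is worthwhile.
            if hasattr(mm, "madvise") and hasattr(mmap, "MADV_SEQUENTIAL"):
                mm.madvise(mmap.MADV_SEQUENTIAL)
            total = 0
            for start in range(0, len(mm), CHUNK):
                total += sum(mm[start:start + CHUNK])
        # Hint: the cached pages of this file are no longer needed.
        # A length of 0 means "through the end of the file".
        if hasattr(os, "posix_fadvise"):
            os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)
    return total


def demo_hints():
    """Return (checksum via the mapping, checksum computed directly)."""
    payload = bytes(i % 251 for i in range(200000))
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(payload)
        return checksum_with_hints(path), sum(payload)
    finally:
        os.remove(path)
```

Because the kernel is free to ignore either hint, measuring the effect on the target system is the only way to know whether they help.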

Important Considerations:

- Performance Trade-offs: Uncached access improves performance only in specific scenarios. Reading from disk is much slower than reading cached data, and without read-ahead, sequential reads in particular can suffer.

- Memory Management: Mapping a file reserves virtual address space for the whole mapped range, while physical memory is consumed only as pages are touched. Locking or pre-populating a large mapping, however, commits that memory up front and can cause memory pressure and performance degradation if the system runs low.

- Data Synchronization: When multiple processes or threads access the same uncached memory-mapped file, careful synchronization mechanisms are essential to prevent data corruption.
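The synchronization point can be sketched with several threads updating a counter stored in a mapped file. This is a minimal example assuming threads within one process and a `threading.Lock`; separate processes would need a cross-process primitive instead, such as a file lock. The names `increment_counter` and `run_counter_demo` are hypothetical.

```python
import mmap
import os
import struct
import tempfile
import threading


def increment_counter(mm, lock, times):
    """Increment the 64-bit counter at offset 0 of the mapping `times` times."""
    for _ in range(times):
        # The read-modify-write sequence must not interleave with other writers.
        with lock:
            (value,) = struct.unpack_from("<Q", mm, 0)
            struct.pack_into("<Q", mm, 0, value + 1)


def run_counter_demo(workers=4, times=1000):
    """Run `workers` threads against one mapping and return the final count."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "r+b") as f:
            f.write(struct.pack("<Q", 0))
            f.flush()  # the file must already be 8 bytes long when mapped
            with mmap.mmap(f.fileno(), 8) as mm:
                lock = threading.Lock()
                threads = [
                    threading.Thread(target=increment_counter,
                                     args=(mm, lock, times))
                    for _ in range(workers)
                ]
                for t in threads:
                    t.start()
                for t in threads:
                    t.join()
                mm.flush()
                (final,) = struct.unpack_from("<Q", mm, 0)
        return final
    finally:
        os.remove(path)
```

Without the lock, two threads can read the same value and both write back value + 1, losing an update; the mapping itself provides no protection against that.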

FAQs about Uncached Memory-Mapped Files

Q: When should I use uncached memory-mapped files?

A: Uncached memory-mapped files are beneficial when dealing with large datasets, frequent updates, real-time applications, or scenarios requiring deterministic performance and data integrity. However, it is crucial to consider the trade-offs, such as potential performance degradation for sequential reads and increased memory pressure.

Q: How do I implement uncached memory-mapped files?

A: The implementation details vary across operating systems and programming languages. Refer to the documentation for your specific platform and programming language to understand the flags or options used to disable caching.

Q: Are there any drawbacks to uncached memory-mapped files?

A: Yes, uncached access can be slower for sequential reads compared to cached access, and it can lead to memory pressure if the file is large. Additionally, careful synchronization is required for concurrent access to prevent data corruption.

Q: Can I use uncached memory-mapped files for all applications?

A: No, uncached memory-mapped files are not suitable for every application. They are most beneficial in scenarios where performance gains outweigh the trade-offs, such as real-time applications or high-performance data processing.

Tips for Using Uncached Memory-Mapped Files

- Profile your application: Before using uncached access, carefully profile your application to identify the bottlenecks and determine if uncached access would be beneficial.

- Consider memory pressure: Ensure the system has enough memory and address space for the portion of the file you map, and map large files in smaller windows if it does not.

- Implement proper synchronization: If multiple processes or threads access the same uncached file, implement appropriate synchronization mechanisms to prevent data corruption.

- Use appropriate flags: Carefully select the flags or options passed to the memory-mapping function so that caching behaves as intended, and check which of them your platform actually supports.
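The profiling tip can be started with a small timing harness that runs the same workload through ordinary reads and through a mapping. This is a rough sketch, not a rigorous benchmark: the file is small, it is probably already cached after being written, and the helper names (`sum_with_read`, `sum_with_mmap`, `time_call`, `compare`) are hypothetical.

```python
import mmap
import os
import tempfile
import time

CHUNK = 1 << 16


def sum_with_read(path):
    """Sum the file's bytes using ordinary buffered reads."""
    total = 0
    with open(path, "rb") as f:
        while True:
            block = f.read(CHUNK)
            if not block:
                break
            total += sum(block)
    return total


def sum_with_mmap(path):
    """Sum the file's bytes through a read-only mapping."""
    total = 0
    with open(path, "rb") as f, \
            mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        for start in range(0, len(mm), CHUNK):
            total += sum(mm[start:start + CHUNK])
    return total


def time_call(fn, path, repeats=3):
    """Return (result, best wall-clock seconds) over `repeats` runs."""
    best = float("inf")
    result = None
    for _ in range(repeats):
        t0 = time.perf_counter()
        result = fn(path)
        best = min(best, time.perf_counter() - t0)
    return result, best


def compare(size=1 << 20):
    """Time both strategies on a temporary file of `size` random bytes."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(os.urandom(size))
        return {
            "read": time_call(sum_with_read, path),
            "mmap": time_call(sum_with_mmap, path),
        }
    finally:
        os.remove(path)
```

Calling `compare()` returns the checksum and best time for each strategy. Meaningful numbers require files and access patterns that resemble the real workload, ideally measured with a cold cache as well as a warm one.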

Conclusion

Uncached memory-mapped files can improve performance in specific scenarios by reducing or bypassing the cache layer and accessing the underlying file more directly. The approach is most valuable for applications that deal with large datasets, frequent updates, real-time requirements, or a need for deterministic behavior. It also has trade-offs, including slower sequential reads and increased memory pressure. Developers who weigh those trade-offs against the application's requirements, and measure the result, can decide whether uncached access is worth adopting.
