Unlocking Performance: A Deep Dive into Memory Mapping and Cache Management

Memory mapping lets an application access a file or other resource as if it were part of the process’s address space. Reads and writes become ordinary memory accesses, so the application no longer needs explicit read() and write() calls for every operation. However, mapped pages pass through the operating system’s cache, and that interaction affects both performance and durability. This article uses "uncache" as an informal label for forcing cached changes out to the underlying file. Knowing when and how to do that is key to tuning memory-mapped workloads.

The Role of Caching in Memory Mapping

Memory mapping fundamentally relies on the operating system’s caching mechanism. When a process maps a file, the system does not immediately load the entire file into memory. Instead, it reserves a range of virtual addresses and associates it with the file’s contents. The actual data is fetched only when the process first touches a page of the mapped range, which triggers a page fault that the kernel resolves by loading that page. This demand-driven approach reduces memory overhead, because pages that are never touched are never loaded.

The operating system keeps recently accessed file data, including pages of mapped files, in a cache in main memory (the page cache). Subsequent requests for the same data can then be served directly from the cache, bypassing the much slower disk access.
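The demand-driven behavior described above can be sketched in a few lines. This is a minimal illustration, assuming Python's standard mmap module; the helper names read_mapped_slice and make_sample_file and the 1 MiB sample file are hypothetical and exist only for this example.

```python
import mmap
import os
import tempfile


def make_sample_file(size=1 << 20):
    """Create a hypothetical 1 MiB sample file filled with a repeating 0..255 pattern."""
    fd, path = tempfile.mkstemp()
    with os.fdopen(fd, "wb") as f:
        f.write(bytes(range(256)) * (size // 256))
    return path


def read_mapped_slice(path, offset, length):
    """Map a file read-only and return `length` bytes starting at `offset`."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file. Creating the mapping does not
        # copy the file contents into the process.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            # Slicing touches only the pages covering this range; the kernel
            # supplies them from its cache or reads them from disk on a miss.
            return mm[offset:offset + length]


if __name__ == "__main__":
    sample = make_sample_file()
    print(read_mapped_slice(sample, 4096, 4))
    os.remove(sample)
```

Whether a given access is served from the cache or from disk is decided by the kernel, so the sketch shows the access pattern rather than any particular timing.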

The Importance of Uncache

While caching enhances performance in most scenarios, it opens a durability gap when data in a mapped file is modified. When a process writes to a shared file mapping, the system does not immediately write the change to the underlying disk. Instead, it marks the cached page as dirty and writes it back later. If only the process crashes, the kernel still holds the dirty pages and normally writes them back. If the whole system crashes or loses power first, any updates that have not yet been written back are lost, and the file on disk may be left in an inconsistent state.

Here, the concept of "uncache" comes into play. Uncaching involves explicitly instructing the operating system to write cached changes to the underlying file right away or, less commonly, to bypass the cache altogether. Once such a request completes, the data has been handed to the storage device, so it survives a system failure that occurs afterward.

Uncache in Action: A Case Study

Consider a scenario where a process is responsible for real-time data logging. The process writes data to a memory-mapped file, with each write operation updating a specific record within the file. If the system crashes or loses power before the cached updates are written to disk, the log on disk will be incomplete, and recent records are lost.

By flushing after each write, the process ensures that a record has been written back before it moves on to the next one. This narrows the window for data loss to the record currently being written, at the cost of one synchronous flush per record.
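The logging scenario above can be sketched as follows. This is a minimal illustration, not a production logger: the MappedLog class, the record layout (a sequence number and a floating-point reading), and the fixed capacity are all assumptions made for the example. It relies on mmap.flush(), which on Unix-like systems issues a synchronous msync() for the mapping.

```python
import mmap
import os
import struct
import tempfile

RECORD = struct.Struct("<Qd")  # hypothetical record: sequence number, reading
CAPACITY = 1024                # hypothetical fixed number of record slots


class MappedLog:
    """Fixed-size record log stored in a memory-mapped file."""

    def __init__(self, path):
        size = RECORD.size * CAPACITY
        self._fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
        os.ftruncate(self._fd, size)         # make the file as large as the mapping
        self._mm = mmap.mmap(self._fd, size)  # shared, read/write by default

    def write(self, slot, seq, value):
        """Store one record and flush before returning."""
        RECORD.pack_into(self._mm, slot * RECORD.size, seq, value)
        self._mm.flush()  # blocks until the dirty pages have been written back

    def read(self, slot):
        return RECORD.unpack_from(self._mm, slot * RECORD.size)

    def close(self):
        self._mm.close()
        os.close(self._fd)


if __name__ == "__main__":
    fd, path = tempfile.mkstemp()
    os.close(fd)
    log = MappedLog(path)
    log.write(0, 1, 20.5)
    print(log.read(0))
    log.close()
    os.remove(path)
```

Flushing the whole mapping on every write keeps the sketch short. A device with a volatile write cache may still hold data after the flush returns, so the guarantee ultimately depends on the storage stack.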

Techniques for Uncaching

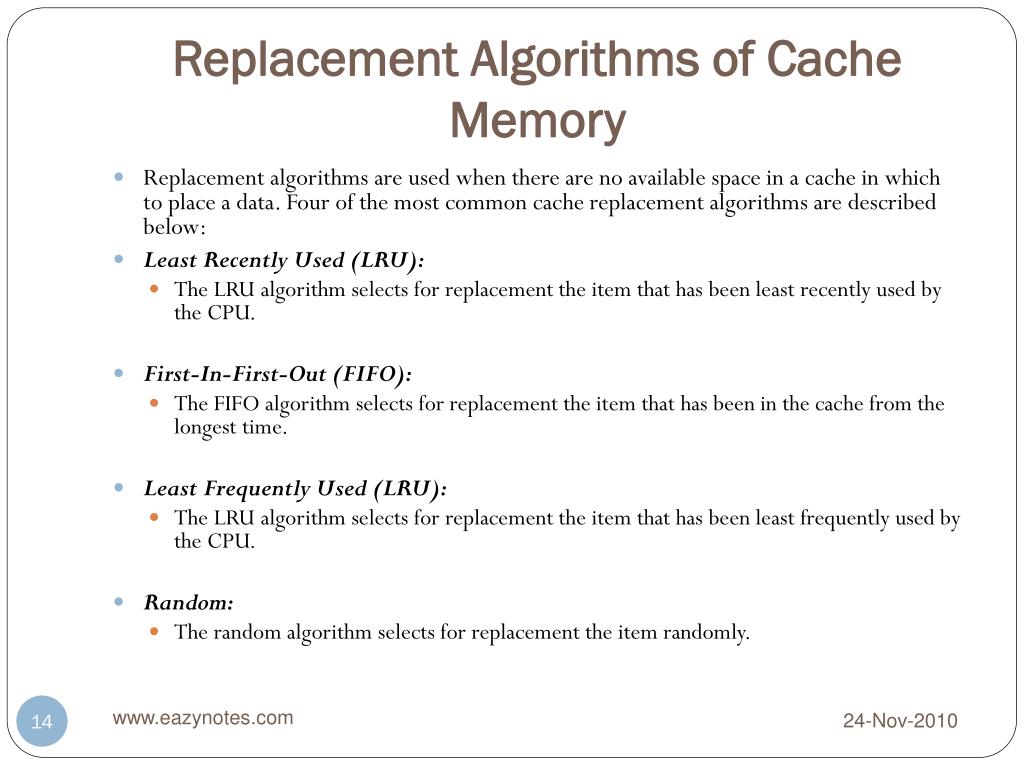

Several techniques can be employed to achieve uncache behavior, each with its own advantages and disadvantages:

1. Explicit System Calls: Operating systems provide system calls that control when cached changes to a memory-mapped file are written back. For example, in POSIX systems, the msync() function flushes a range of a mapping to the underlying file. With the MS_SYNC flag the call blocks until the write-back completes; with MS_ASYNC it only schedules the write-back. Because msync() takes a page-aligned start address and a length, applications can flush specific regions of the mapped file rather than the whole mapping.

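2. Memory Mapping Flags: When creating a memory mapping, certain flags can be specified to influence the caching behavior. For example, Linux (not POSIX) provides the MAP_SYNC flag, which must be combined with MAP_SHARED_VALIDATE and is supported only for files on DAX-capable file systems, typically backed by persistent memory. With MAP_SYNC, the kernel keeps the file system metadata for the mapping durable, so the application can persist its stores by flushing CPU caches from user space, without calling msync(). The flag does not make writes to an ordinary disk-backed mapping synchronous; those still require msync().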

3. File System Options: Some file systems provide mount options that affect when data is written. For example, the sync mount option on Linux requests that I/O to the file system be done synchronously, at a substantial performance cost. By contrast, the noatime mount option does not change how file data is cached; it disables access-time updates, which only reduces metadata writes.

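4. Hardware-Assisted Uncaching: Certain hardware platforms offer dedicated mechanisms for pushing data out of CPU caches or bypassing them. Examples include cache-line flush instructions such as CLFLUSHOPT and CLWB on x86, which are used with persistent memory, and uncached or write-combining memory types for device memory. These mechanisms can reduce the cost of frequent, consistent updates, but they apply only where the hardware and the mapping type support them.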
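Technique 1 above, flushing only part of a mapping, can be sketched as follows. The helper flush_range is hypothetical. It assumes a Unix-like system, where mmap.flush(offset, size) calls msync() and the offset must be a multiple of mmap.PAGESIZE, so the start of the range is rounded down to a page boundary.

```python
import mmap
import os
import tempfile


def flush_range(mm, start, length):
    """Flush only the pages of `mm` that cover [start, start + length).

    Returns the (offset, size) actually passed to mm.flush().
    """
    if length <= 0 or not 0 <= start < len(mm):
        raise ValueError("range lies outside the mapping")
    page = mmap.PAGESIZE
    aligned_start = (start // page) * page  # round down to a page boundary
    end = min(len(mm), start + length)      # clamp to the end of the mapping
    size = end - aligned_start
    mm.flush(aligned_start, size)
    return aligned_start, size


if __name__ == "__main__":
    fd, path = tempfile.mkstemp()
    os.ftruncate(fd, 4 * mmap.PAGESIZE)
    mm = mmap.mmap(fd, 4 * mmap.PAGESIZE)
    mm[mmap.PAGESIZE + 10:mmap.PAGESIZE + 15] = b"hello"
    # Only the second page is flushed; the other three are left to the kernel.
    print(flush_range(mm, mmap.PAGESIZE + 10, 5))
    mm.close()
    os.close(fd)
    os.remove(path)
```

Flushing a narrow range keeps unrelated dirty pages in the cache, which matters when one large mapping holds both critical and non-critical data.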

Considerations for Uncaching

While uncaching improves durability, it can come at the cost of performance. Each synchronous flush blocks the caller until the storage device acknowledges the write, so frequent uncache operations increase disk I/O and can slow the application down. It’s crucial to weigh data integrity against performance when deciding whether to employ uncaching.

Furthermore, uncaching can introduce complexity into application code, requiring developers to manage the caching behavior explicitly. This complexity can make the code more difficult to maintain and debug.

Frequently Asked Questions about Uncache

1. Is uncache always necessary?

Uncaching is not always necessary. If the application is read-intensive, or if it can tolerate losing the most recent writes after a system crash, leaving write-back to the operating system gives better performance. However, when an application must not lose writes it has already acknowledged, it needs to flush those writes explicitly.

2. What are the performance implications of uncaching?

Uncaching can impact performance, as it increases disk I/O activity. The performance impact depends on several factors, including the frequency of uncache operations, the size of the data being written, and the underlying hardware.

3. How can I measure the performance impact of uncaching?

Benchmarking is crucial to assess the performance impact of uncaching. By measuring the execution time of the application with and without uncaching, you can quantify the performance difference.

4. Are there any alternatives to uncaching?

Alternatives to uncaching include using a write-ahead log (WAL), which ensures data consistency by logging all writes before committing them to the underlying storage. Another option is to use a database that supports transactional operations, guaranteeing data integrity even in the event of system failures.

5. How can I determine if uncaching is appropriate for my application?

Consider the following factors:

- Frequency of data modification: If the application frequently modifies data in memory-mapped files, uncaching may be necessary.

- Data consistency requirements: If data consistency is critical, uncaching is essential.

- Performance requirements: If performance is a concern, the potential impact of uncaching on performance should be carefully considered.
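The write-ahead log mentioned in question 4 can be sketched as follows. This is a simplified illustration under stated assumptions, not a complete WAL: the entry layout (a byte offset and an 8-byte value) and the function names logged_write and replay are hypothetical, and the sketch has no checksums or log truncation. The idea is that the log entry is forced to disk with os.fsync() before the mapped data is modified, so the mapped update itself can stay cached.

```python
import os
import struct

ENTRY = struct.Struct("<QQ")  # hypothetical log entry: byte offset, new 8-byte value


def logged_write(log_fd, mm, offset, value):
    """Record the intended change durably, then apply it to the mapping."""
    os.write(log_fd, ENTRY.pack(offset, value))
    os.fsync(log_fd)                           # the log entry reaches disk first
    struct.pack_into("<Q", mm, offset, value)  # the mapped update may stay cached


def replay(log_path, mm):
    """Reapply every complete log entry to the mapping, e.g. after a crash."""
    with open(log_path, "rb") as log:
        data = log.read()
    usable = len(data) - len(data) % ENTRY.size  # ignore a torn trailing entry
    for offset, value in ENTRY.iter_unpack(data[:usable]):
        struct.pack_into("<Q", mm, offset, value)
    mm.flush()  # make the recovered state durable
```

Here log_fd is assumed to be a file descriptor opened for appending, and mm a writable mapping of the data file. Appending to a log is sequential I/O, which is often cheaper than flushing scattered pages of a large mapping, but the actual trade-off depends on the workload and the device.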

Tips for Optimizing Memory Mapping with Uncache

- Minimize uncache operations: Only uncache data that requires immediate persistence to disk.

- Optimize write operations: Batch writes together to reduce the number of uncache operations.

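- Use asynchronous I/O: Asynchronous write-back allows the application to continue processing while data is being written to disk. In POSIX, msync() with MS_ASYNC schedules the write-back and returns without waiting. The trade-off is that the call gives no confirmation that the data has reached the disk, so a later synchronous flush is still needed at points where durability must be guaranteed.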

- Consider using a dedicated cache: If uncaching is necessary, consider keeping frequently read data in a separate application-level cache or a separate mapping that never needs flushing, so that flush calls cover only the data that must persist.

- Monitor performance: Monitor the performance of the application with and without uncaching to identify potential bottlenecks and optimize accordingly.
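The batching and monitoring tips above can be combined into a small benchmark. This is a rough sketch, assuming a temporary file on whatever storage backs the system's temp directory; the function name timed_writes and the 64-byte record size are hypothetical. It writes the same records while flushing either after every record or after every batch, and reports the elapsed time and the number of flush calls.

```python
import mmap
import os
import tempfile
import time


def timed_writes(record_count, flush_every, record_size=64):
    """Write records into a mapped temp file, flushing every `flush_every` records.

    Returns (elapsed_seconds, flush_calls).
    """
    size = record_count * record_size
    payload = b"x" * record_size
    fd, path = tempfile.mkstemp()
    try:
        os.ftruncate(fd, size)
        with mmap.mmap(fd, size) as mm:
            flushes = 0
            start = time.perf_counter()
            for i in range(record_count):
                mm[i * record_size:(i + 1) * record_size] = payload
                if (i + 1) % flush_every == 0:
                    mm.flush()
                    flushes += 1
            if record_count % flush_every:
                mm.flush()  # flush the final partial batch
                flushes += 1
            elapsed = time.perf_counter() - start
        return elapsed, flushes
    finally:
        os.close(fd)
        os.remove(path)


if __name__ == "__main__":
    for batch in (1, 16, 256):
        seconds, calls = timed_writes(2048, batch)
        print(f"flush every {batch:>3} records: {calls:>4} flushes, {seconds:.4f} s")
```

The absolute numbers depend heavily on the file system and the device (a temp directory on tmpfs, for instance, makes flushes nearly free), so the results are only meaningful when measured on the storage the application will actually use.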

Conclusion

Uncaching is a powerful technique for protecting data in memory-mapped operations. By forcing cached changes out to the underlying file, it narrows the window in which a system failure can lose data. However, every flush costs I/O time, so it’s crucial to weigh data integrity against performance when deciding where to employ it. By understanding the interplay between memory mapping, caching, and uncaching, developers can tune their applications for both performance and data consistency.
